In cybersecurity, what’s public isn’t always obvious. Sometimes, what feels like a private interaction quietly becomes a digital confession, broadcast to the entire internet with a single click.

As of last night, I confirmed something that looks trivial at first glance but is deeply alarming on closer scrutiny: Google is indexing ChatGPT conversations shared via the public “share” links. These aren’t leaks in the traditional sense; there’s no zero-day, no CVE, no bypass. It’s a feature that plays Russian roulette with sensitive data, and the security implications are serious.

Let’s unpack what this means for data privacy, security hygiene, and how a well-meaning design opens the floodgates to public exposure.

The Feature That “Works As Intended”

The “share” feature on ChatGPT allows users to generate a unique link containing a UUID. On paper, this seems secure. A 128-bit UUID offers a gargantuan keyspace, rendering brute-force discovery nearly impossible. For instance:

https://chatgpt.com/share/673e8cd2-e738-8009-95e9-1cc04330bdc3
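To put rough numbers on that keyspace, here is a back-of-the-envelope sketch in Python. It assumes a fully random version-4 UUID, which carries 122 random bits (the other 6 are fixed version and variant bits), and a hypothetical attacker making a billion guesses per second against a billion live links. Those rates are illustrative assumptions, not measurements. One caveat: Python’s `uuid` module reports the example link’s UUID as version 8, the custom layout defined in RFC 9562, so real share IDs may carry less randomness than the v4 figure.

```python
import uuid

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def expected_years_to_hit(random_bits: int, guesses_per_sec: float, live_links: int = 1) -> float:
    """Expected years of blind guessing before any guess lands on a live share link.

    Each random guess succeeds with probability live_links / 2**random_bits,
    so the expected number of guesses is 2**random_bits / live_links.
    """
    expected_guesses = 2 ** random_bits / live_links
    return expected_guesses / guesses_per_sec / SECONDS_PER_YEAR

# The example link from above: the version nibble (first digit of the third group) is 8.
example = uuid.UUID("673e8cd2-e738-8009-95e9-1cc04330bdc3")
print(example.version)  # 8

# Hypothetical attacker: 1e9 guesses/s against 1e9 live links, assuming 122 random bits.
years = expected_years_to_hit(122, 1e9, live_links=10**9)
print(f"{years:.2e} years")  # on the order of 1e11 years
```

Under these assumptions guessing is hopeless, which is the point: the weak spot is not the ID, it’s where the link ends up.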

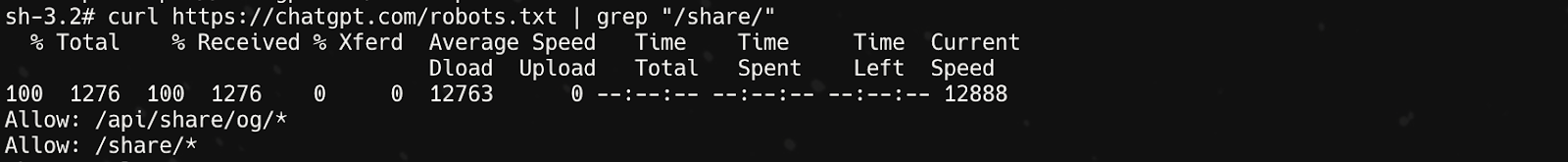

This design leads users to assume these links are “unlisted”, much like an unlisted YouTube video: hidden unless you have the URL. But here’s the catch: OpenAI’s robots.txt explicitly allows search engines to crawl the /share/ path. That means if these links appear anywhere public (forums, blogs, public Slack communities, even public Trello boards), they’re fair game for Google’s crawlers.

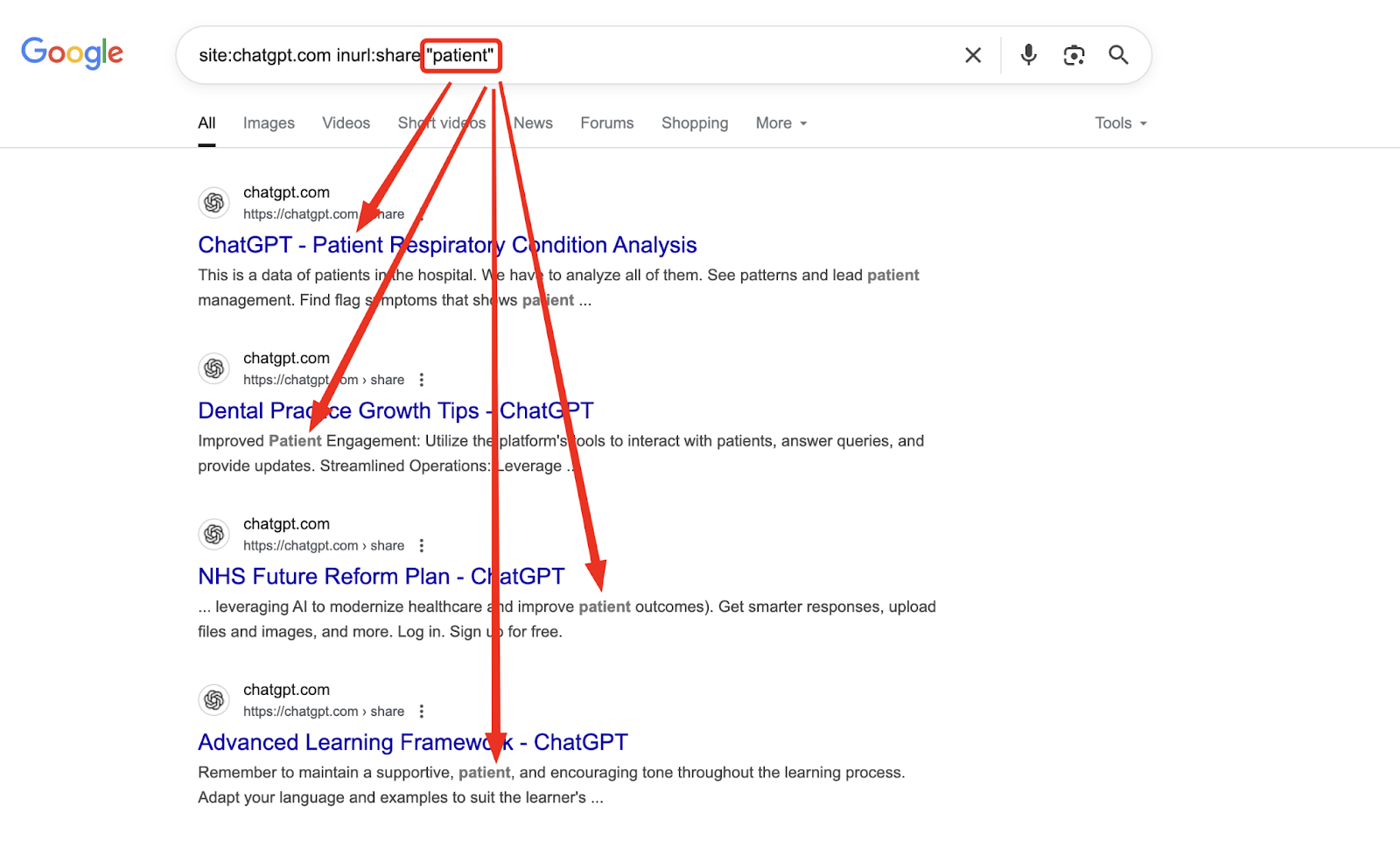

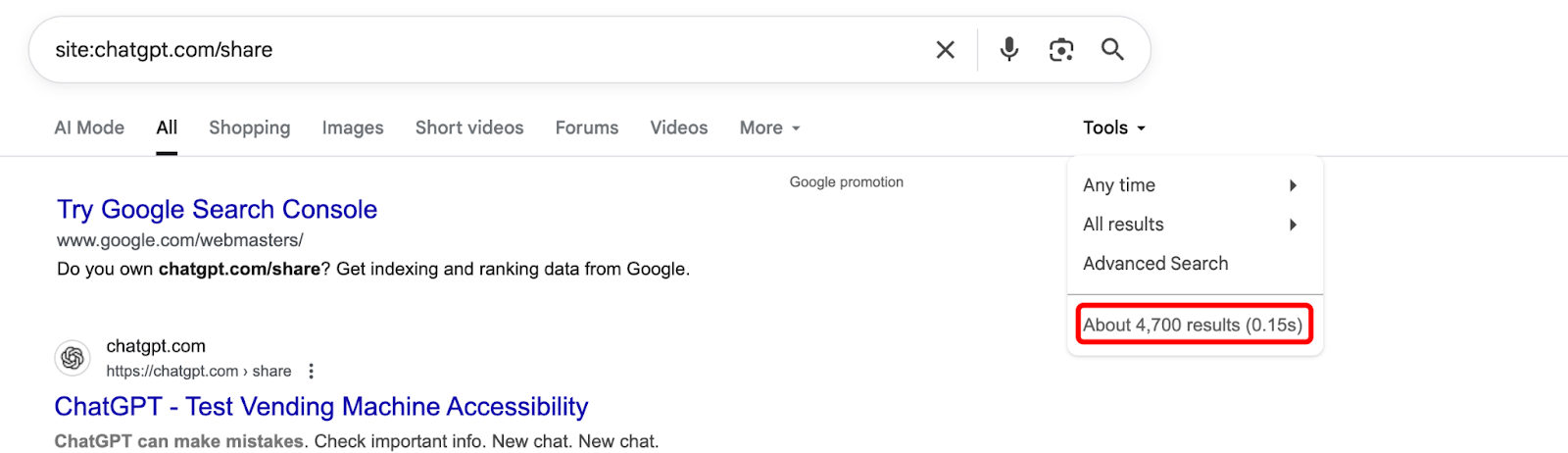
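To see how a crawler reads such a rule, the sketch below feeds a hypothetical robots.txt (written for illustration, not copied from chatgpt.com) to Python’s standard `urllib.robotparser`. The `/c/abc` URL is an arbitrary non-share path. `Allow` is listed before `Disallow` because Python’s parser applies the first matching rule, whereas Google applies the most specific (longest) match; this ordering makes both interpretations agree. Keep in mind that robots.txt only governs crawling: an allowed page can be fetched and, absent a `noindex` signal, indexed once a crawler finds the link somewhere public.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration only; not OpenAI's actual file.
ROBOTS_TXT = """\
User-agent: *
Allow: /share/
Disallow: /
"""

def crawlable(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

share_url = "https://chatgpt.com/share/673e8cd2-e738-8009-95e9-1cc04330bdc3"
print(crawlable(ROBOTS_TXT, share_url))                    # True
print(crawlable(ROBOTS_TXT, "https://chatgpt.com/c/abc"))  # False
```

Swapping in a site’s real robots.txt text lets you check a live path, but `can_fetch` answers only the crawling question, not whether a page will end up in search results.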

Result? Thousands of ChatGPT conversations are now publicly searchable with the right Google dork.

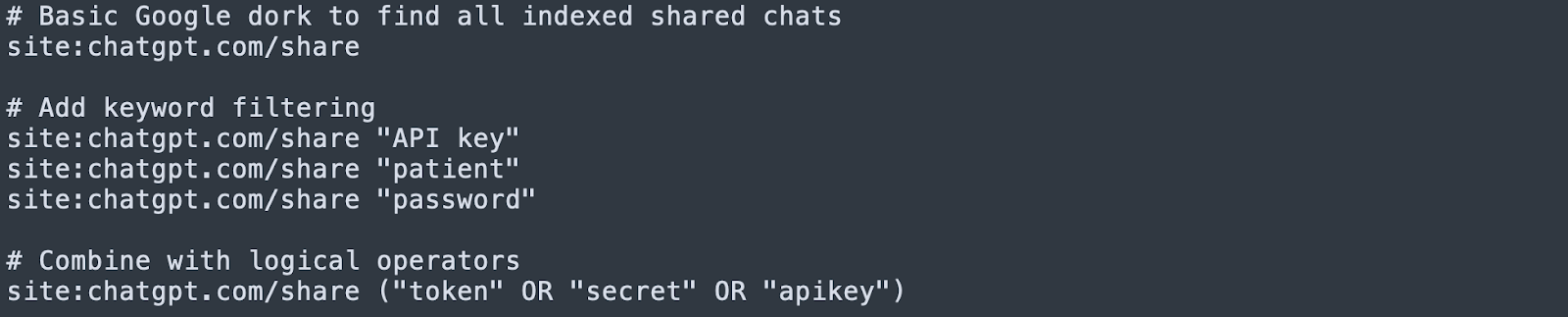

Try this:

site:chatgpt.com/share confidential password

…and welcome to an unintentional pastebin of sensitive thought processes.

From Pastebin to Privacy Nightmare

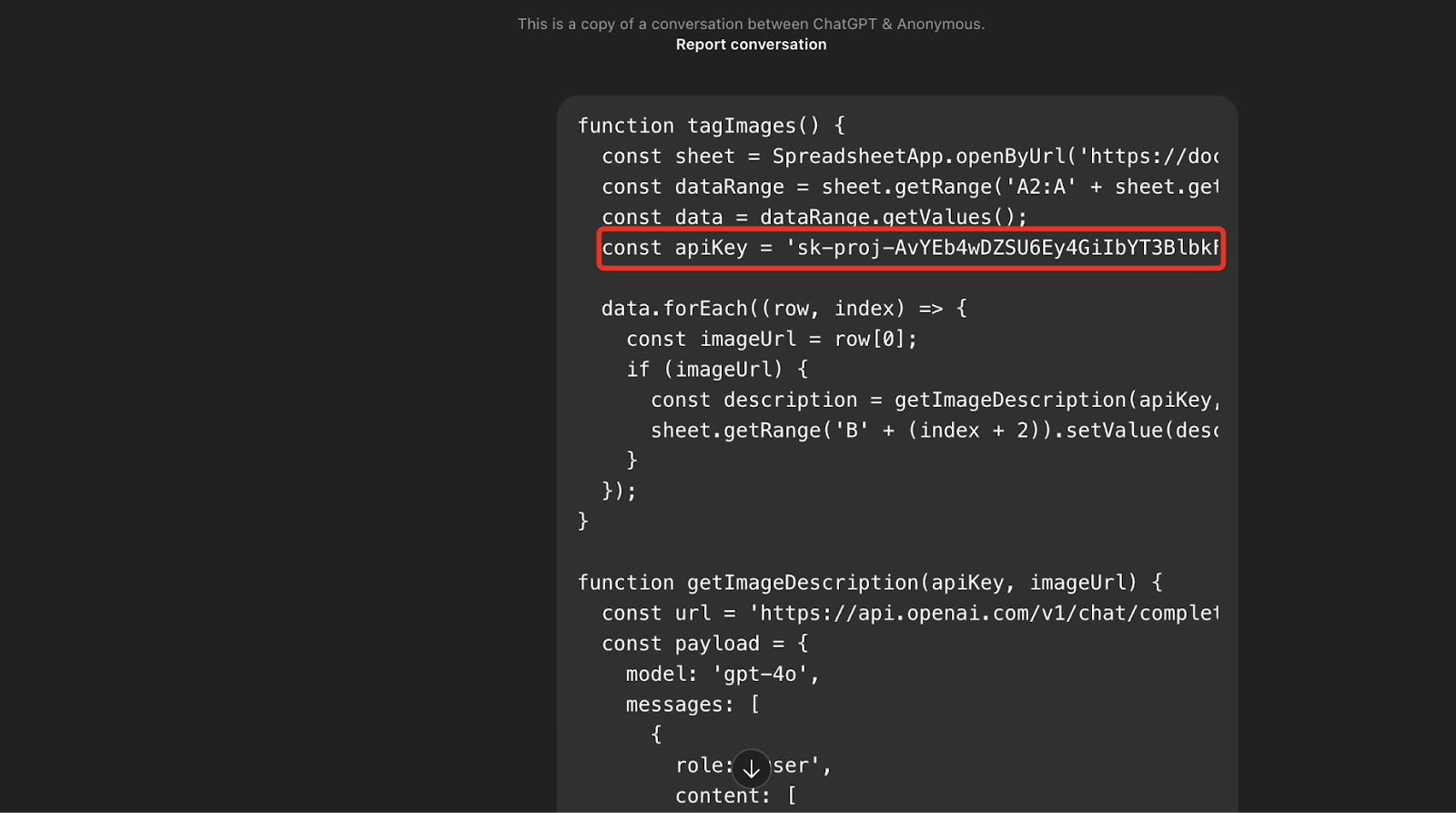

Within minutes of testing this hypothesis, I retrieved conversations discussing internal APIs, embedded credentials, source code snippets, and even PHI-like data in a few extreme cases.

In one conversation, a user had shared a working OpenAI API key in the prompt history. In another, what appeared to be a breakdown of a healthcare billing workflow was sitting wide open!

Let me be very clear. This isn’t a vulnerability in OpenAI’s system. It’s a perfect storm of:

- A share feature designed for ease.

- Publicly accessible URLs.

- Indexing allowed by default.

- Users who assume “only the recipient will see this.”

But security isn’t just about what can be done; it’s about what will inevitably go wrong when you mix frictionless features with human behavior.

This is that case study.

Why Is This A Real Security Concern?

Most users don’t think like adversaries. They don’t understand the implications of copy-pasting sensitive data into an AI chatbot and then hitting “share.” To them, it’s a quick way to send a friend or colleague a conversation. To Google, it’s crawlable content. To an attacker? A buffet of digital breadcrumbs.

Consider the following real-world risk vectors:

- Credential exposure: API keys, auth tokens, and OAuth secrets are often dropped into ChatGPT while debugging.

- System details: Developers paste error logs or infrastructure paths.

- PII & PHI: Marketers, analysts, or customer support reps summarize client data during AI-assisted drafting.

- Insider intel: Strategy discussions, pre-launch features, and even legal drafts.

Treating “share” as private is like uploading your AWS keys to an unlisted Google Drive folder and thinking you’re safe.

Blame Game: OpenAI vs. User Responsibility

Some might argue: “This isn’t OpenAI’s fault. Users shouldn’t share sensitive stuff.” That’s the classic “blame the user” defense. And while there’s truth to it, it ignores the deeper issue:

“Security failures rarely arise from evil intentions; they come from optimistic defaults.”

— Every frustrated pentester, ever.

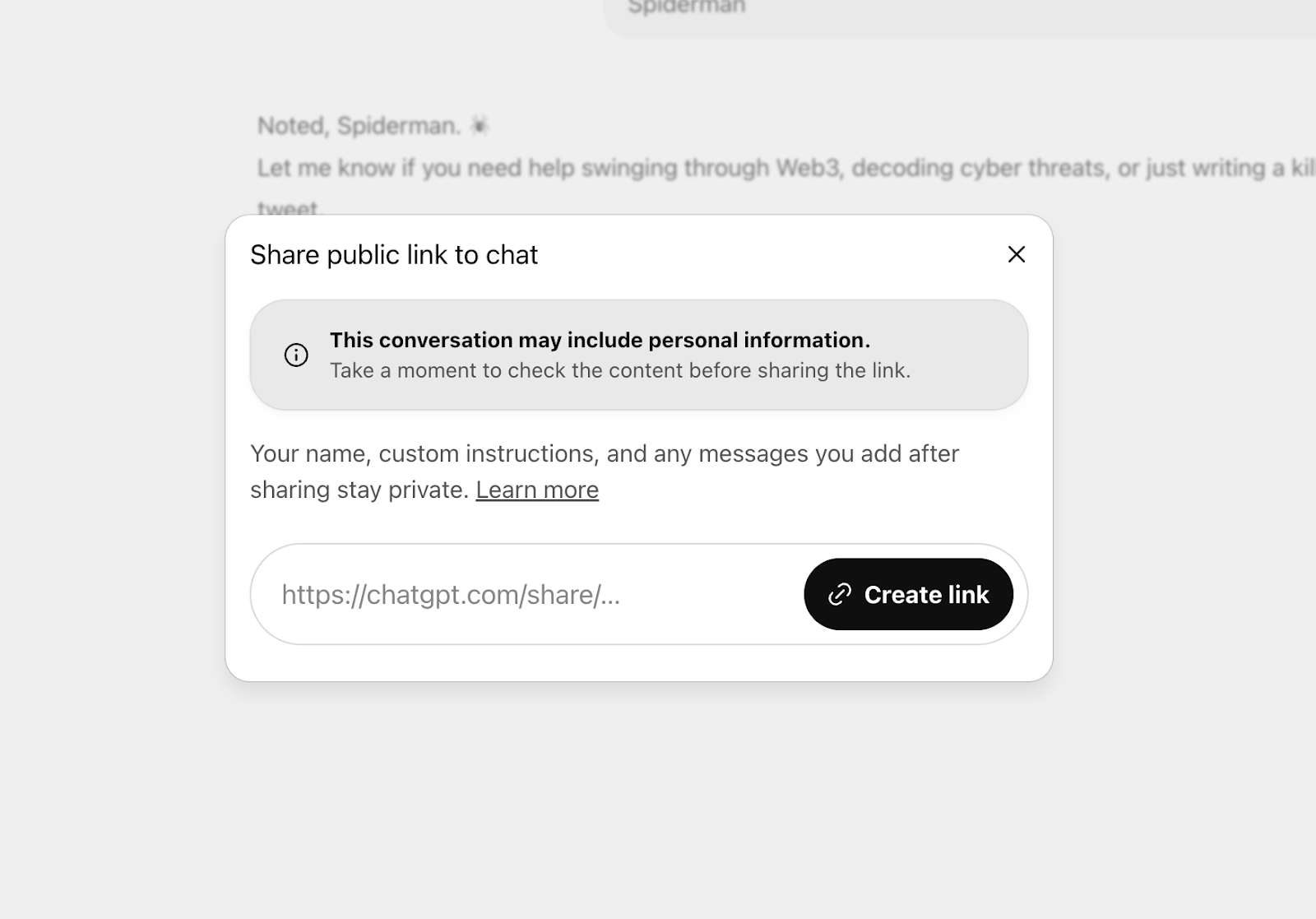

OpenAI didn’t hide anything. The feature behaves like a public pastebin. But that’s precisely the problem. There’s zero friction between “I need to share this with a teammate” and “I just published this to the web.”

There’s no big red warning. No option to toggle indexing. No ephemeral sharing window. No audit trail. Nothing that makes a user pause and ask: “Should this really be out there forever?”

There’s just one small notice: “This conversation may include personal information.” That’s good, but nothing tells users that search engines could index the conversation.

What Needs to Change (And Fast!)?

Let’s be pragmatic. Here’s what OpenAI and its users should consider right now:

For OpenAI:

- Add a clear warning before generating a share link: “This link is public and may be indexed by search engines.”

- Offer a ‘noindex’ option or privacy settings for shared chats.

- Implement auto-expiry for links unless explicitly marked permanent.
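Here is a minimal sketch of what the `noindex` and auto-expiry suggestions could look like server-side, written in plain Python with no web framework. Everything in it is hypothetical: the `ShareLink` type, the one-week default lifetime, the `conv-123` ID, and the `serve_share` handler are illustrations, not OpenAI’s implementation. It issues an unguessable token with an expiry, answers expired links with HTTP 410 Gone, and sends an `X-Robots-Tag: noindex` header unless the user explicitly opted in to indexing. One design note: a crawler only sees a `noindex` header if it is allowed to fetch the page, so blocking `/share/` in robots.txt alone would not reliably keep already-discovered links out of results.

```python
import secrets
from dataclasses import dataclass
from typing import Optional

DEFAULT_TTL_SECONDS = 7 * 24 * 3600  # hypothetical default lifetime: one week

@dataclass
class ShareLink:
    token: str                   # unguessable path segment, e.g. /share/<token>
    conversation_id: str
    expires_at: Optional[float]  # None only if the user explicitly chose "permanent"
    indexable: bool = False      # search indexing is opt-in, never the default

def create_share(conversation_id: str, now: float, *, permanent: bool = False,
                 indexable: bool = False, ttl: int = DEFAULT_TTL_SECONDS) -> ShareLink:
    """Issue a share link that expires `ttl` seconds from `now` unless marked permanent."""
    token = secrets.token_urlsafe(16)  # 16 bytes (128 bits) from the OS CSPRNG
    expires_at = None if permanent else now + ttl
    return ShareLink(token, conversation_id, expires_at, indexable)

def serve_share(link: ShareLink, now: float) -> tuple[int, dict[str, str]]:
    """Return (HTTP status, extra response headers) for a request to `link`."""
    if link.expires_at is not None and now >= link.expires_at:
        return 410, {}  # 410 Gone: the link has expired
    headers: dict[str, str] = {}
    if not link.indexable:
        # Tells crawlers that fetch the page not to add it to their index.
        headers["X-Robots-Tag"] = "noindex"
    return 200, headers

now = 1_700_000_000.0  # fixed timestamp so the example is deterministic
link = create_share("conv-123", now)
print(serve_share(link, now + 60))             # (200, {'X-Robots-Tag': 'noindex'})
print(serve_share(link, now + 8 * 24 * 3600))  # (410, {})
```

Returning 410 rather than 404 signals to crawlers that the page is gone on purpose, which tends to get it dropped from an index sooner.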

For Users:

- Stop treating ChatGPT as a clipboard. If you wouldn’t post it on a forum, don’t paste it into a shared chat.

- Scrub your prompts before sharing.

- Never share API keys, tokens, or internal logs (even for “just debugging”).
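For the “scrub your prompts” advice, a small pre-share redaction pass can catch the most obvious secrets. The sketch below is illustrative and deliberately incomplete: its patterns cover only a few well-known formats (OpenAI-style keys beginning with `sk-`, AWS access key IDs beginning with `AKIA`, and `Bearer` tokens), the minimum lengths are assumptions, and it will miss everything else. Treat it as a seatbelt, not a substitute for a dedicated secret scanner.

```python
import re

# Illustrative patterns only; real secret scanners ship hundreds of rules.
SECRET_PATTERNS = {
    "openai_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "bearer_token": re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/=-]{20,}"),
}

def scrub(text: str) -> tuple[str, list[str]]:
    """Replace likely secrets with placeholders; return cleaned text and the rule names hit."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            hits.append(name)
    return text, hits

# AWS's own documentation example key, not a real credential.
cleaned, hits = scrub("aws_key = AKIAIOSFODNN7EXAMPLE")
print(cleaned)  # aws_key = [REDACTED:aws_access_key_id]
print(hits)     # ['aws_access_key_id']
```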

And for organizations? This is yet another reminder to educate your teams on data handling hygiene in AI workflows.

Final Thoughts

This isn’t a technical exploit. There’s no malicious actor poking holes in OpenAI’s infrastructure. And that’s what makes it so dangerous: it’s not an attack, it’s an accident waiting to happen.

Security professionals often say that breaches happen when convenience wins over caution. This is one of those cases, where a feature designed for collaboration quietly becomes a data leak vector at scale.

In the age of AI-enhanced workflows, assume that everything you share might be seen by the world…because it just might be.

Want more brutally honest takes like this? At Resonance Security, we cut through the noise, decoding breaches, exposing vulnerabilities, and delivering sharp, easy-to-digest insights that actually help you stay ahead.

Our latest release, the H1 2025 Web3 Cybersecurity Report, does exactly that. It breaks down every major hack from the past six months, highlights the most dangerous threat vectors emerging across the space, and shares the security practices every crypto project should be following right now. No fluff. No filler. Just raw, actionable intel.

The best part? It’s completely free. Grab your copy now: https://resonance.security/top-web3-and-crypto-hacks
